Explainable AI

Kemal Erdem, Piotr Mazurek, Piotr Rarus

Agenda

- Why XAI and Where XAI?

- Which XAI? (available methods)

- Integrated Gradients

- How to use it?

- Potential issues with XAI methods

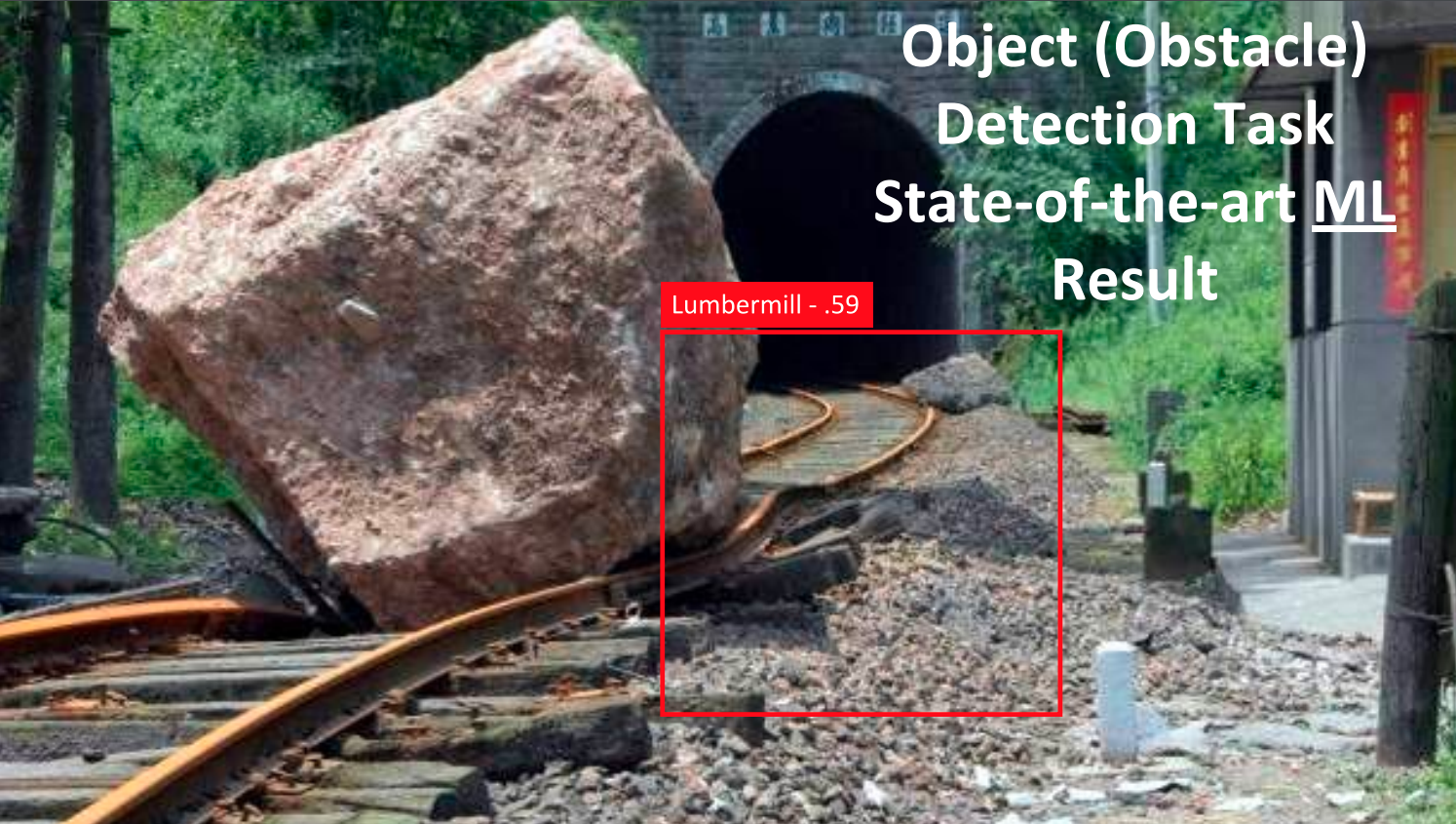

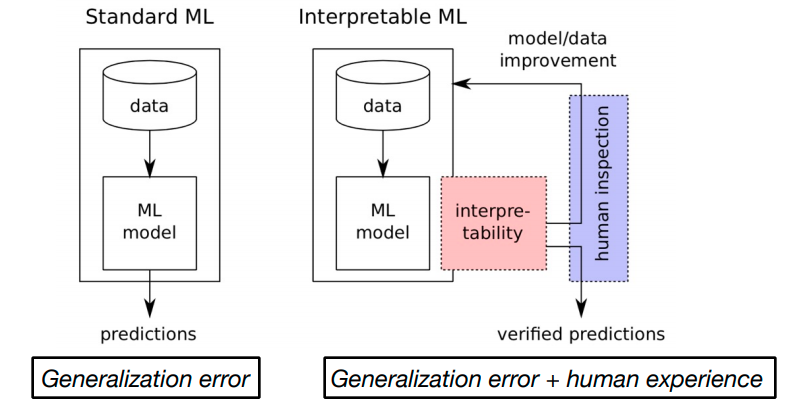

Source: AAAI'20 Conference


Source: W. Samek, A. Binder, MICCAI’18


Source: Google DeepMind's AlphaGo beating world champion Lee Sedol at Go

"Article 22 of GDPR empowers individuals with the right to demand an explanation of how an AI system made a decision that affects them" - European Commission

"Provide an assessment of the risks posed by the automated decision system to the privacy or security and the risks that contribute to inaccurate, unfair, biased, or discriminatory decisions impacting consumers" - Algorithmic Accountability Act 2019

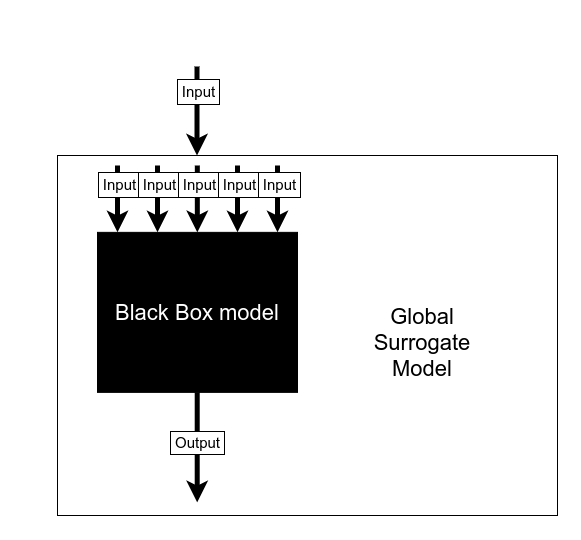

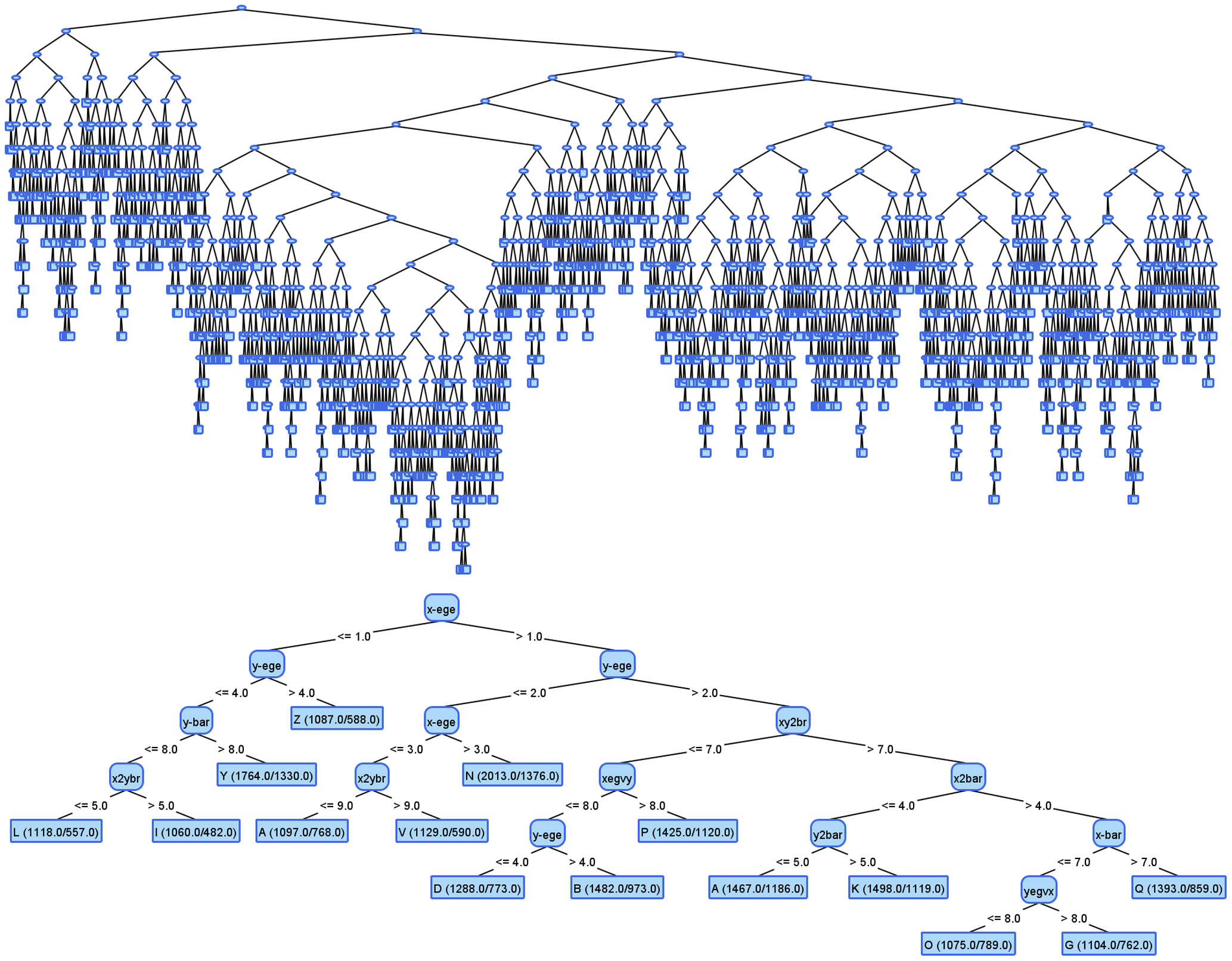

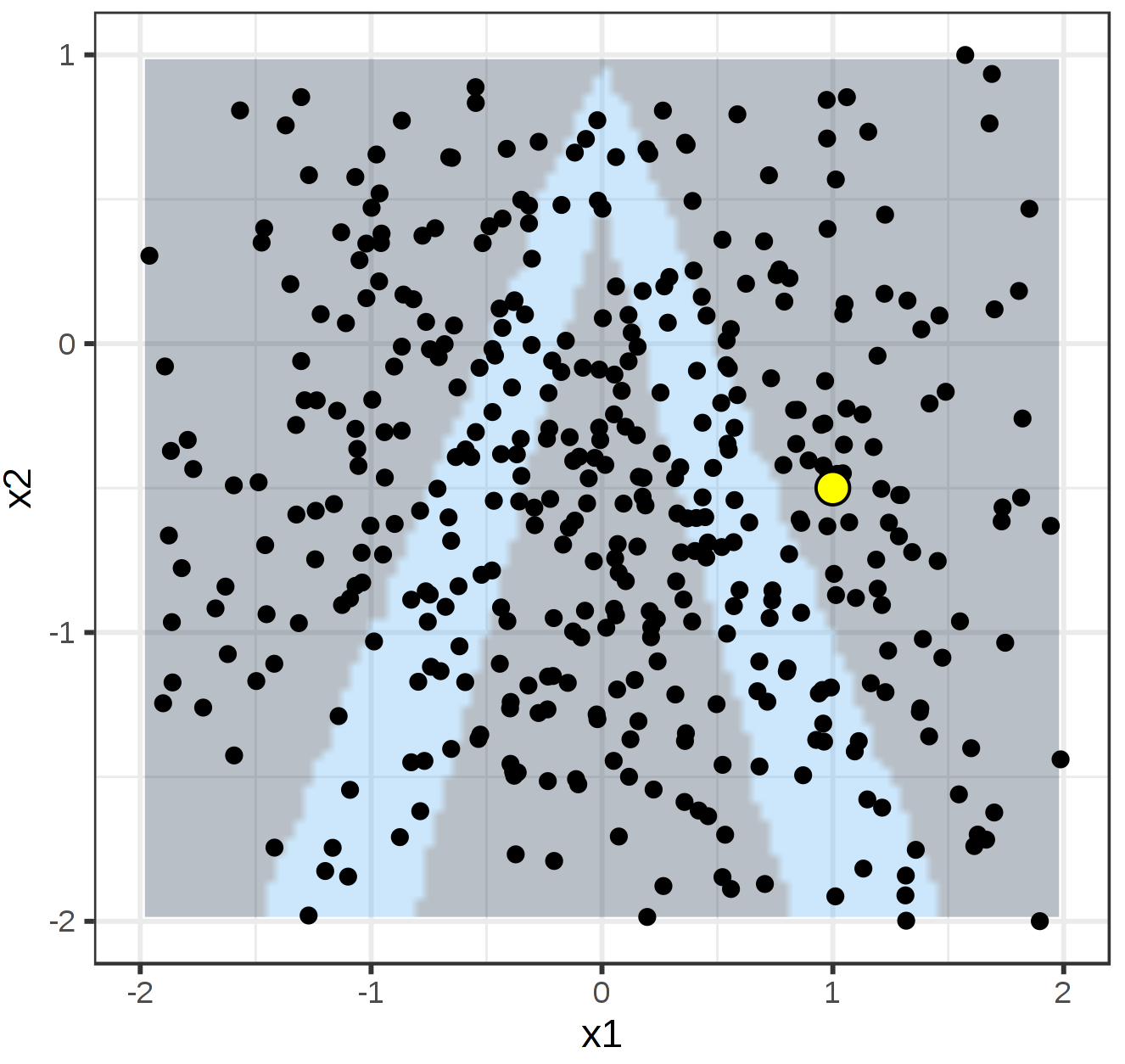

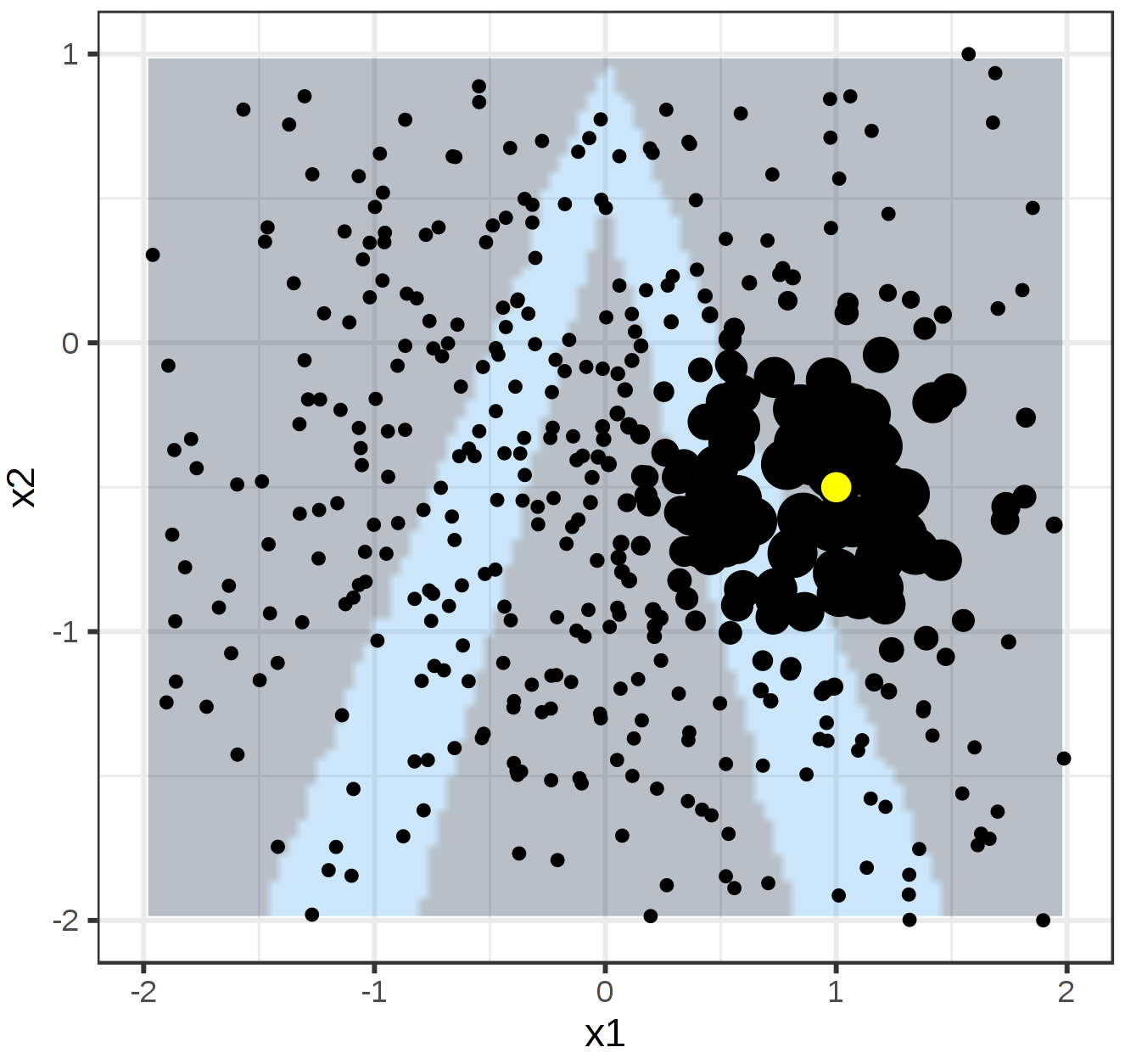

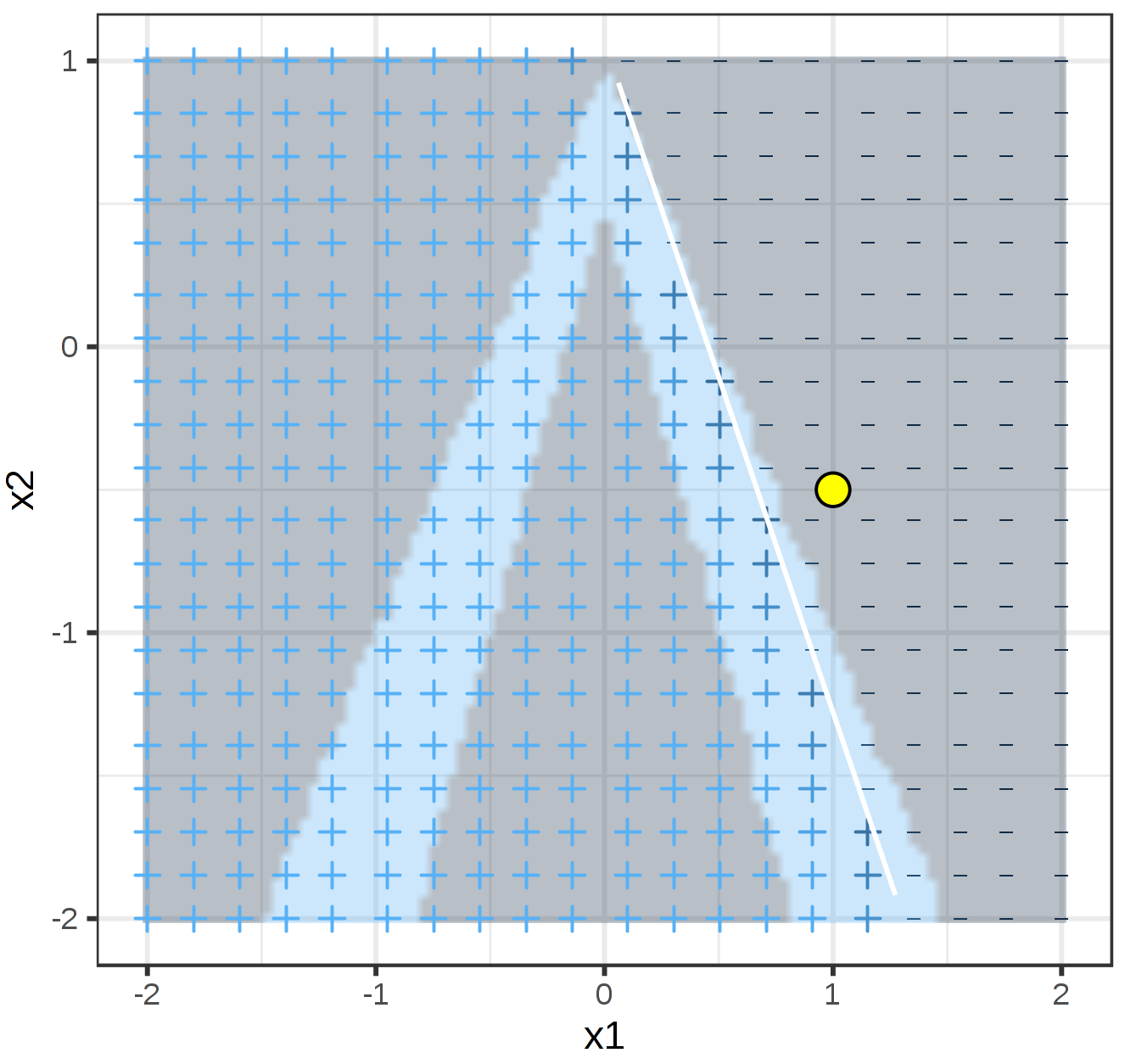

- Surrogate Model Based

- Attribution Based

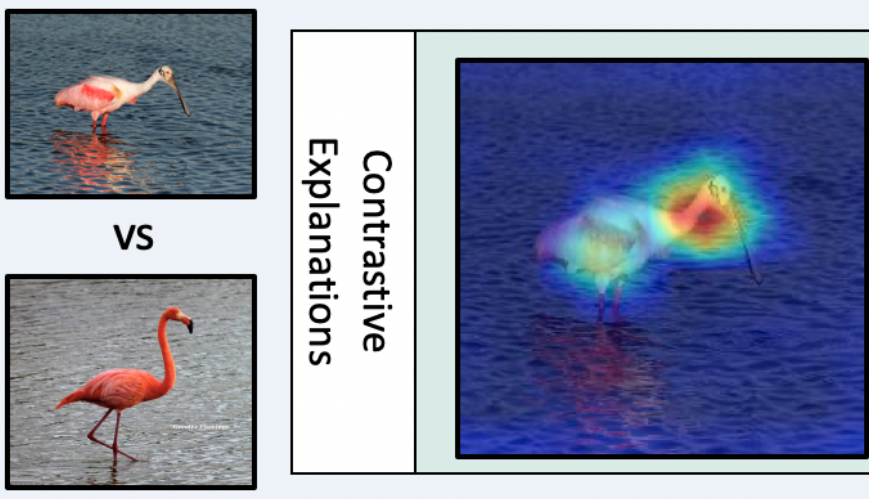

- Contrastive Explanations

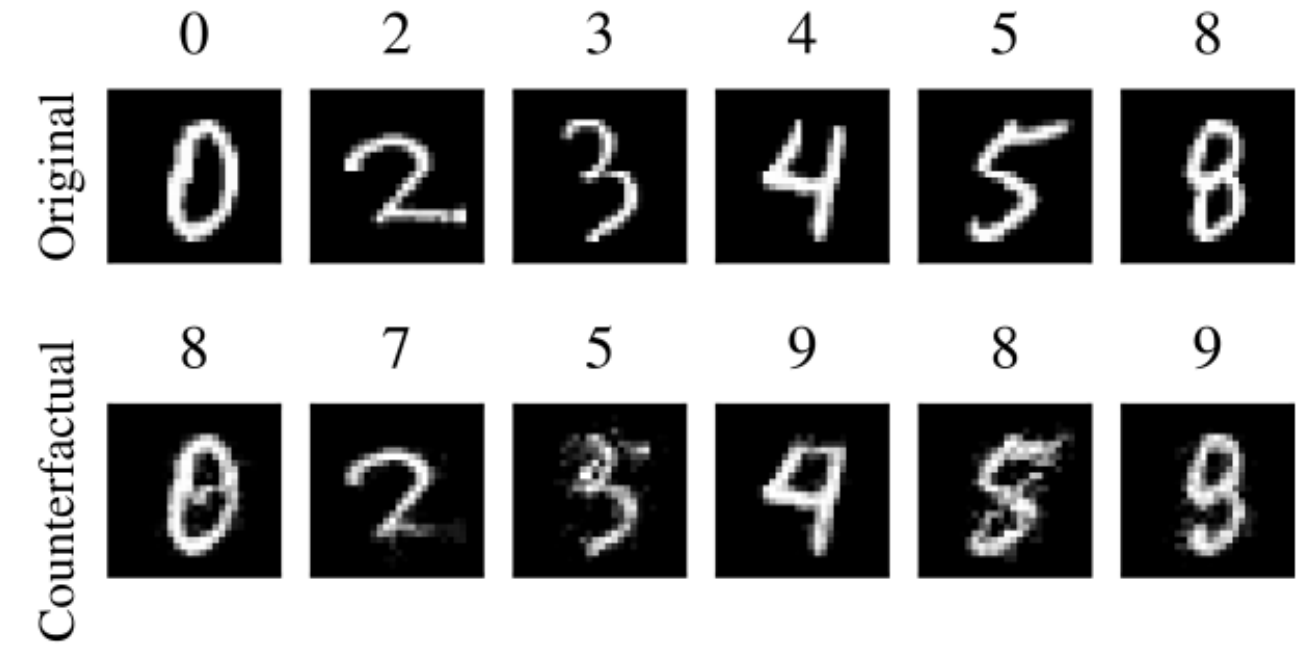

- Counterfactual / Recourse Based

- Example similarity

Black Box model



Global Surrogate model
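A minimal sketch of the global surrogate idea, assuming a one-dimensional input: query the black box on many inputs, fit an interpretable model (here a straight line) to its answers, and check how faithfully the surrogate reproduces them. The `black_box` function and all helper names are hypothetical stand-ins, not part of any library.

```python
def black_box(x):
    """Hypothetical opaque model: we may call it but not inspect it."""
    return 3.0 * x + 0.5 * x * x


def fit_linear_surrogate(xs, ys):
    """Ordinary least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def r_squared(xs, ys, slope, intercept):
    """Fidelity of the surrogate: share of black-box variance it reproduces."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Query the black box on a grid of inputs and fit the surrogate to its outputs
xs = [i / 10 for i in range(-10, 11)]
ys = [black_box(x) for x in xs]
slope, intercept = fit_linear_surrogate(xs, ys)
fidelity = r_squared(xs, ys, slope, intercept)
print(f"surrogate: y = {slope:.2f} * x + {intercept:.2f}, R^2 = {fidelity:.3f}")
```

The surrogate's coefficients are the explanation. The fidelity score shows how far that explanation can be trusted as a description of the black box.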

You have access to the model's structure

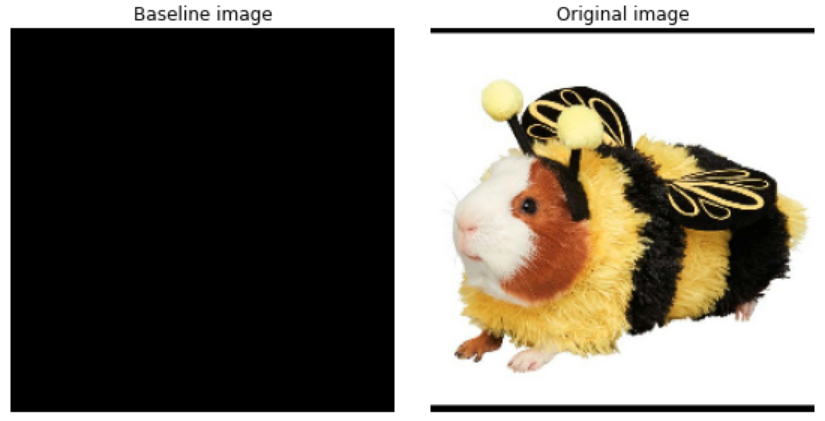

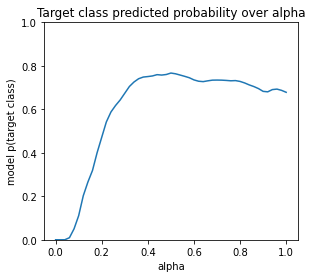

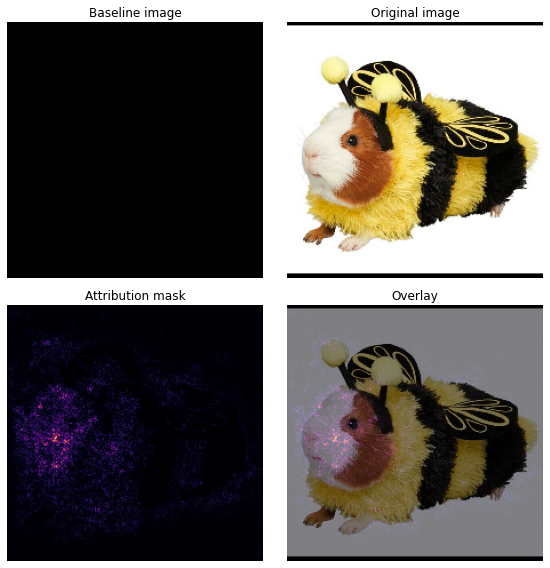

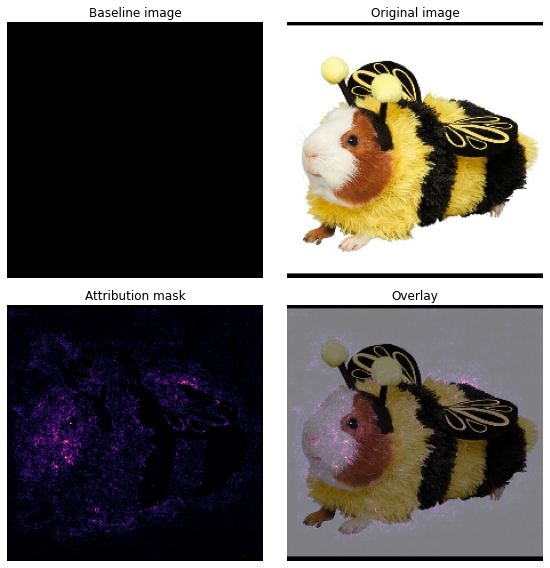

$$ IntegratedGrads_{i}(x) ::= (x_{i} - x'_{i})\times\int_{\alpha=0}^1\frac{\partial F(x'+\alpha \times (x - x'))}{\partial x_i}{d\alpha} $$

$$ IntegratedGrads^{approx}_{i}(x)::=(x_{i}-x'_{i})\times\sum_{k=1}^{m}\frac{\partial F(x' + \frac{k}{m}\times(x - x'))}{\partial x_{i} } \times \frac{1}{m} $$

Source: Axiomatic Attribution for Deep Networks - M. Sundararajan, A. Taly, Q. Yan

- $i$ - feature

- $x$ - input (image)

- $x'$ - baseline (image)

- $\alpha$ - interpolation constant

- $k$ - step index of the scaled interpolation ($k = 1, \dots, m$)

- $m$ - number of steps

- $(x_{i}-x'_{i})$ - scale factor

- $F()$ - model's prediction function

- $\frac{\partial F}{\partial x_{i} }$ - gradient of the model's output with respect to feature $x_i$
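The approximation formula can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the paper's reference implementation: the toy model (a sigmoid of a weighted sum), its weights, and the function names are all made up, and the gradient is written analytically instead of coming from an autodiff framework.

```python
import math

# Hypothetical toy model: sigmoid of a weighted sum. The weights are made up.
WEIGHTS = [0.5, -1.0, 2.0]


def model(x):
    """F(x): the model's prediction function."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def model_grad(x):
    """Analytic gradient dF/dx_i of the toy model."""
    p = model(x)
    return [p * (1.0 - p) * w for w in WEIGHTS]


def integrated_gradients(x, baseline, grad_fn, m=200):
    """Riemann-sum approximation of Integrated Gradients with m steps."""
    avg_grads = [0.0] * len(x)
    for k in range(1, m + 1):
        # Point on the straight line from the baseline x' to the input x
        point = [b + (k / m) * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_fn(point)):
            avg_grads[i] += g / m
    # Scale the averaged gradients by (x_i - x'_i)
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grads)]


x = [1.0, 2.0, 0.5]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline, model_grad)

# Completeness: the attributions should sum to roughly F(x) - F(x')
print(attributions, sum(attributions), model(x) - model(baseline))
```

Comparing the sum of attributions with $F(x) - F(x')$ is a cheap sanity check. If the two differ noticeably, $m$ is probably too small.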

Interpolation process


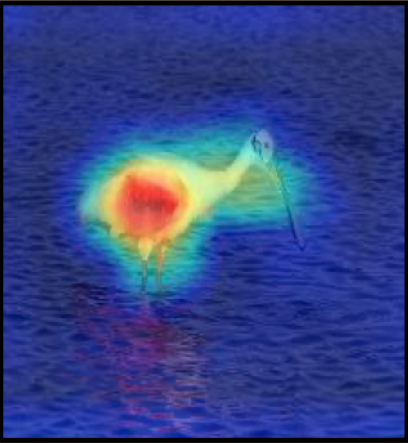

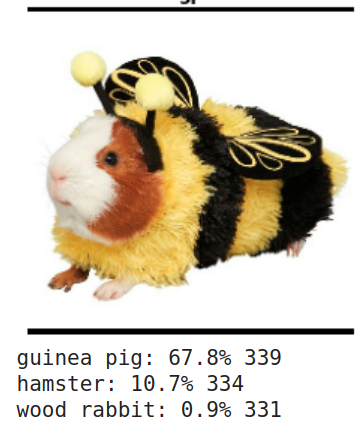

Why Spoonbill, rather than Flamingo?

How to change X to predict Y?
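A counterfactual explanation answers this question by searching for a nearby input that receives the desired prediction. The sketch below is a deliberately naive version: it assumes a hypothetical two-feature linear classifier and moves a single feature along a grid until the label flips. Real recourse methods optimise over all features with distance and plausibility constraints.

```python
def predict(x):
    """Hypothetical linear classifier: returns 1 if the score is positive, else 0."""
    weights = [0.8, -0.5]
    bias = -1.0
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def find_counterfactual(x, target, feature, step=0.05, max_steps=200):
    """Move one feature in both directions until the prediction becomes `target`.

    Returns the first (closest on the grid) modified input found, or None.
    """
    for n in range(1, max_steps + 1):
        for direction in (+1, -1):
            candidate = list(x)
            candidate[feature] = x[feature] + direction * n * step
            if predict(candidate) == target:
                return candidate
    return None


x = [1.0, 0.5]                                   # currently predicted as class 0
cf = find_counterfactual(x, target=1, feature=0)
print(predict(x), cf, predict(cf))
```

The difference between `x` and `cf` is the explanation: it states how much feature 0 would have to change for the model to predict class 1.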

Author: Andrej Karpathy, generated from ILSVRC 2012 images


How to use it

Available Tools


- Captum

- tf-explain

- Lime

- SHAP

Captum

- Dedicated to interpreting PyTorch-based models

- Developed by Facebook under Facebook Open Source

- Available on PyPI

- Used to develop and troubleshoot models

- Can be used in production to help users understand the model's predictions

Available Methods

- Primary Attribution: Evaluates contribution of each input feature to the output of a model.

  - Integrated Gradients

  - Gradient SHAP

  - Saliency

  - Guided Backpropagation, Deconvolution

  - Guided GradCAM

- Layer Attribution: Evaluates contribution of each neuron in a given layer to the output of the model.

  - Layer Conductance

  - Internal Influence

  - Layer Activation

  - GradCAM

- Neuron Attribution: Evaluates contribution of each input feature on the activation of a particular hidden neuron.

  - Neuron Conductance

  - Neuron Integrated Gradients

  - Neuron GradientSHAP

Example

    from captum.attr import GuidedGradCam
    from torchvision import models

    # Load pretrained AlexNet and switch it to inference mode
    alexnet = models.alexnet(pretrained=True)
    alexnet.eval()

    # Create object to interpret the model
    # To make GradCam work we pass reference to last conv layer
    guided_gc = GuidedGradCam(alexnet, alexnet.features[10])

    # Predict output on data (batch_t is a preprocessed batch of input images)
    out = alexnet(batch_t)

    # Point out classes with highest score
    score, index = out.max(1)

    # Use interpreter to calculate attributions
    attributions = guided_gc.attribute(batch_t, index)

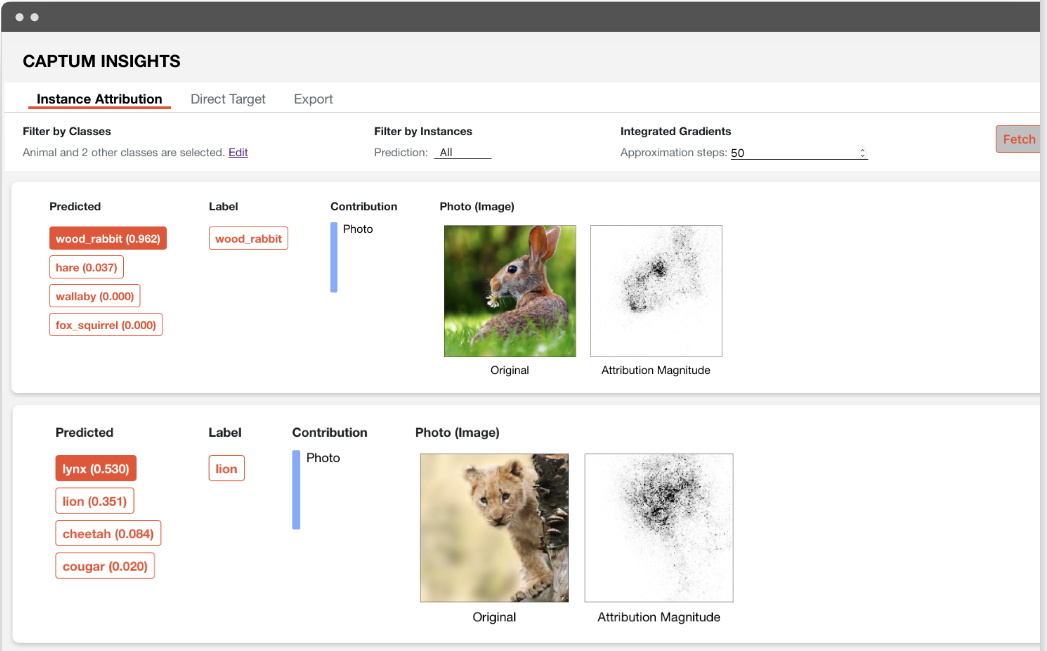

Captum Insights

    import torch
    import torch.nn.functional as F
    from captum.insights import AttributionVisualizer
    from captum.insights.features import ImageFeature
    from torchvision import models

    # Load pretrained AlexNet
    alexnet = models.alexnet(pretrained=True)
    alexnet.eval()

    # Launch visualization inside the notebook
    visualizer = AttributionVisualizer(
        models=[alexnet],
        score_func=lambda o: F.softmax(o, 1),
        classes=[...],  # class labels
        features=[
            ImageFeature(
                "Photo",
                baseline_transforms=[...],
                input_transforms=[...],
            )
        ],
        dataset=...,
    )
    visualizer.render()


tf-explain

- Dedicated to interpreting TensorFlow-based models

- No official backing from a large company

- Available on PyPI

- Used to develop and troubleshoot models

- Can be used in production to help users understand the model's predictions

Available Methods

- Activations Visualization

- Vanilla Gradients

- Gradients*Inputs

- Occlusion Sensitivity

- Grad CAM

- SmoothGrad

- Integrated Gradients

Usage - Core API

    from tf_explain.core.grad_cam import GradCAM

    explainer = GradCAM()
    output = explainer.explain(*explainer_args)
    explainer.save(output, output_dir, output_name)

Usage - Callbacks

    from tf_explain.callbacks.grad_cam import GradCAMCallback

    callbacks = [
        GradCAMCallback(
            validation_data=(x_val, y_val),
            layer_name="activation_1",
            class_index=0,
            output_dir=output_dir,
        )
    ]

    model.fit(x_train, y_train, batch_size=2, epochs=2, callbacks=callbacks)

Lime

- Applicable to any black-box classifier

- Works on text, tabular and image data

- Available on PyPI

Usage

    from lime import lime_image

    explainer = lime_image.LimeImageExplainer()
    explanation = explainer.explain_instance(
        image,    # input image
        predict,  # predict function of interpreted classifier
    )
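The core idea behind LIME can be sketched without the library: sample points around the instance, weight them by proximity, and fit a simple model to the black box's answers. The example below is a one-dimensional illustration under assumptions of my own (a hypothetical `black_box`, a Gaussian proximity kernel, a weighted straight line as the surrogate). The real library works on interpretable features such as superpixels and fits a sparse linear model.

```python
import math
import random


def black_box(x):
    """Hypothetical non-linear model that we can only query."""
    return x * x


def local_surrogate(x0, n_samples=500, spread=1.0, kernel_width=0.3, seed=0):
    """Fit a proximity-weighted line to the black box around x0."""
    rng = random.Random(seed)
    xs = [x0 + rng.gauss(0.0, spread) for _ in range(n_samples)]
    ys = [black_box(x) for x in xs]
    # Samples close to x0 get a weight near 1, distant samples near 0
    ws = [math.exp(-((x - x0) ** 2) / kernel_width ** 2) for x in xs]

    total = sum(ws)
    mean_x = sum(w * x for w, x in zip(ws, xs)) / total
    mean_y = sum(w * y for w, y in zip(ws, ys)) / total
    cov = sum(w * (x - mean_x) * (y - mean_y) for w, x, y in zip(ws, xs, ys))
    var = sum(w * (x - mean_x) ** 2 for w, x in zip(ws, xs))
    slope = cov / var
    return slope, mean_y - slope * mean_x


slope, intercept = local_surrogate(x0=2.0)
print(f"local explanation around x0 = 2: y = {slope:.2f} * x + {intercept:.2f}")
```

The slope of the local line plays the role of the feature's importance near `x0`. It should land close to the black box's true local slope, which is 4 at `x0 = 2` for this toy function.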


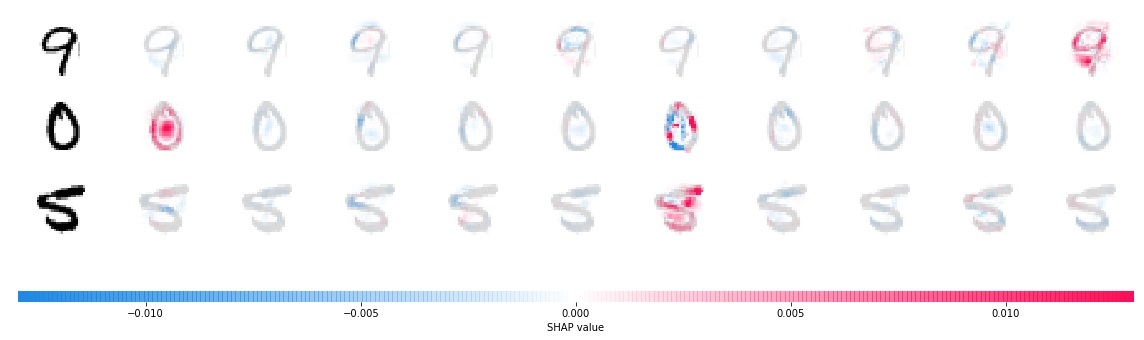

DeepShap - Usage

    import numpy as np
    import shap

    model = ...   # classifier model
    images = ...  # image data set

    # Use the first 100 images as background and explain the next three
    background = images[:100]
    test_images = images[100:103]

    explainer = shap.DeepExplainer(model, background)
    shap_values = explainer.shap_values(test_images)

    # Move the channel axis last for plotting
    shap_numpy = [np.swapaxes(np.swapaxes(s, 1, -1), 1, 2) for s in shap_values]
    test_numpy = np.swapaxes(np.swapaxes(test_images.numpy(), 1, -1), 1, 2)

    shap.image_plot(shap_numpy, -test_numpy)
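DeepExplainer approximates Shapley values, because computing them exactly is infeasible for image-sized inputs. For a model with a handful of features they can be computed exactly by enumerating all coalitions. The sketch below does this for a hypothetical three-feature `toy_model`, replacing "missing" features with a baseline value. The function names and the baseline convention are assumptions of this example, not SHAP's API.

```python
from itertools import combinations
from math import factorial


def value(coalition, x, baseline, model):
    """Model output when features outside `coalition` are set to the baseline."""
    mixed = [x[i] if i in coalition else baseline[i] for i in range(len(x))]
    return model(mixed)


def shapley_values(x, baseline, model):
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = value(set(subset) | {i}, x, baseline, model)
                without_i = value(set(subset), x, baseline, model)
                phi[i] += weight * (with_i - without_i)
    return phi


def toy_model(features):
    """Hypothetical model with an interaction between features 0 and 1."""
    a, b, c = features
    return 2.0 * a + a * b - c


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(x, baseline, toy_model)
print(phi, sum(phi), toy_model(x) - toy_model(baseline))
```

The interaction term `a * b` is split evenly between features 0 and 1, and the values sum to the difference between the prediction and the baseline prediction.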

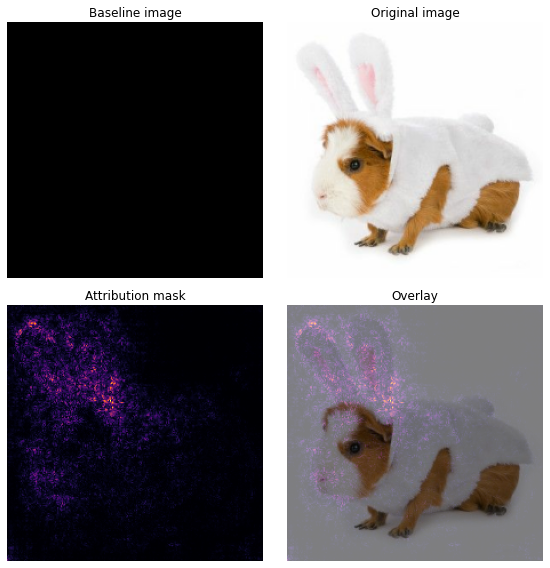

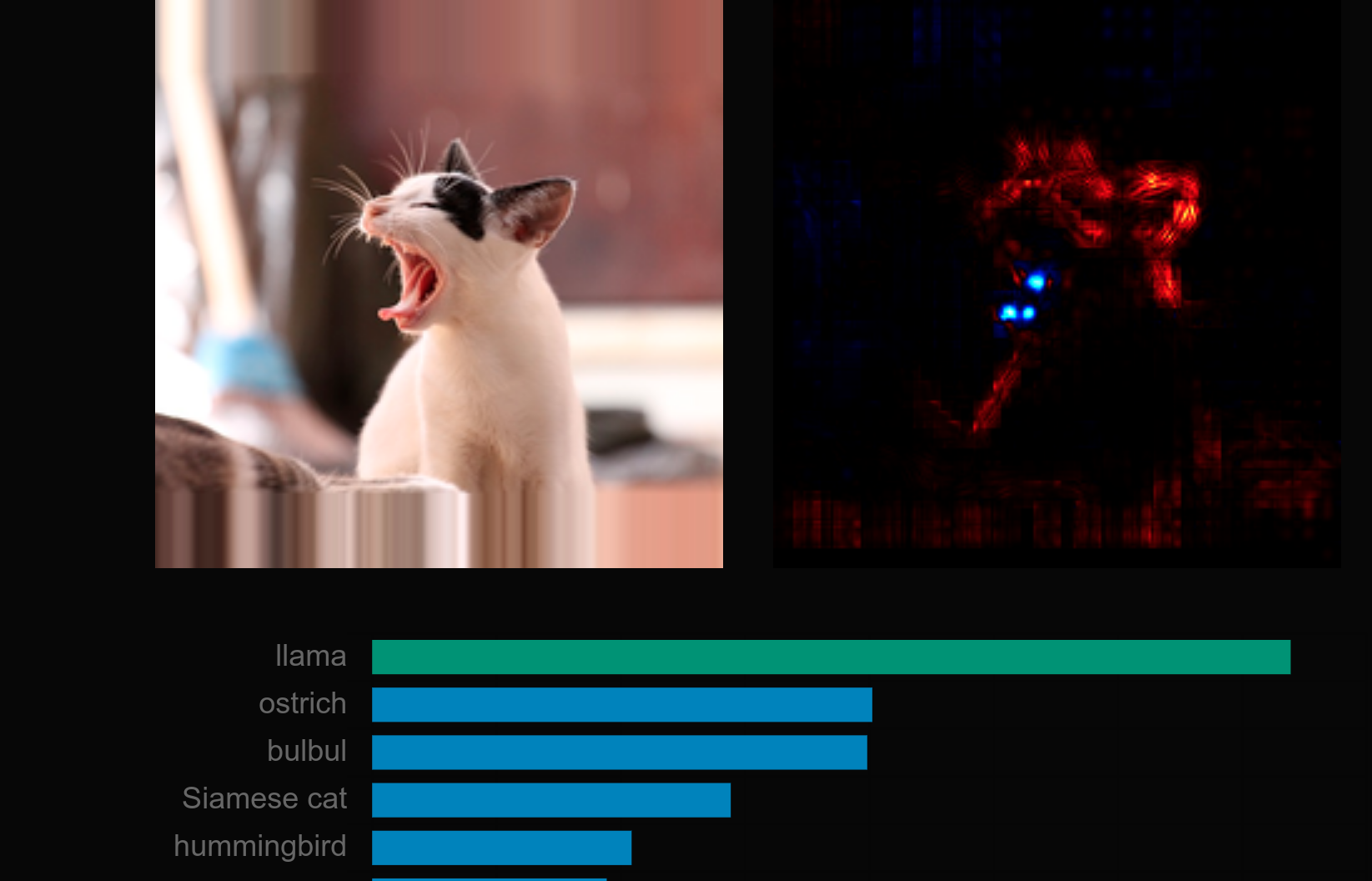

DeepShap - Example

Contribution of features to each class prediction


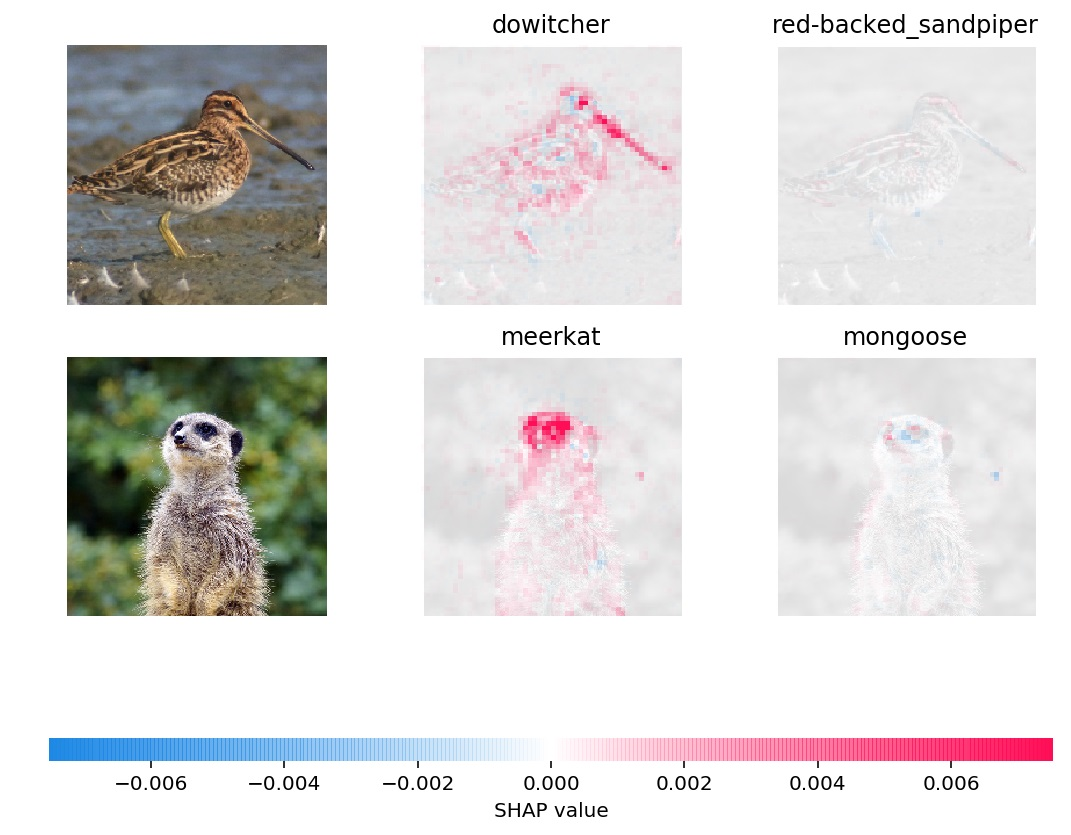

SHAP - Gradient Explainer

Combines ideas from Integrated Gradients, SHAP and SmoothGrad into a single interpretation method.

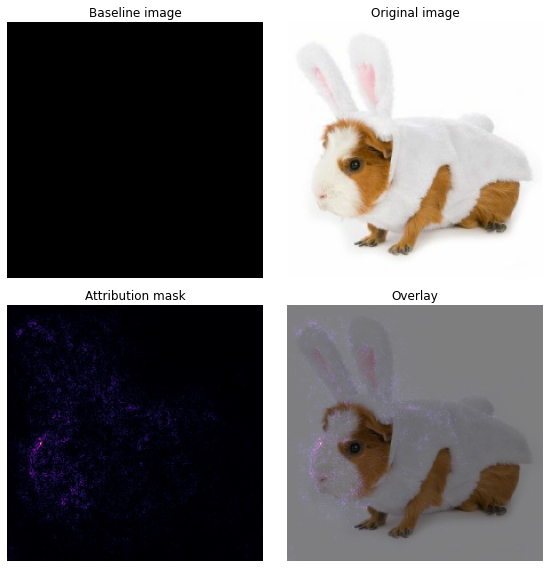

Contribution of features to 2 top predictions


XAI challenges

How to evaluate an explanation?

Why is one explanation better than another?

Two distinct approaches

Human-grounded Measures

Computational Measures

Human-grounded Measures

Metrics like IoU

Researchers conduct surveys asking participants which parts of the data are important, then compare the answers with the explanation
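A minimal sketch of the IoU comparison, assuming both the explanation and the human annotation have already been turned into binary masks of the same size (for example by thresholding an attribution map). The masks below are made-up toy data.

```python
def iou(mask_a, mask_b):
    """Intersection over Union of two binary masks given as 2D lists of 0/1."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    # Two empty masks agree perfectly by convention
    return intersection / union if union else 1.0


# Pixels the explanation method marked as important (toy data)
explanation_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
# Pixels the survey participants marked as important (toy data)
human_mask = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]

score = iou(explanation_mask, human_mask)
print(f"IoU = {score:.3f}")
```

An IoU of 1 means the explanation highlights exactly what people consider important, and 0 means no overlap at all.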

Computational Measures

Dozens of approaches

No established standards

Open research area

Infidelity

How well an explanation predicts the change in the model's output when the input image is perturbed (lower is better)


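    import numpy as np
    import torch
    from captum.attr import Saliency
    from captum.metrics import infidelity

    # ImageClassifier stands for any torch.nn.Module image classifier
    net = ImageClassifier()
    saliency = Saliency(net)

    # Computes saliency maps for class 3 for the input image.
    attribution = saliency.attribute(input, target=3)

    # Define a perturbation function for the input:
    # it returns the noise and the perturbed input
    def perturb_fn(inputs):
        noise = torch.tensor(np.random.normal(0, 0.003, inputs.shape)).float()
        return noise, inputs - noise

    infidelity(net, perturb_fn, input, attribution)
    # >> 0.2177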
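To show what the score measures, here is a plain-Python sketch of the quantity as I understand it: the mean squared difference between the output change the attribution predicts for a perturbation and the change the model actually shows. The linear `model`, the Gaussian noise and the name `toy_infidelity` are assumptions of this example, not Captum's implementation.

```python
import random


def model(x):
    """Hypothetical linear model, so its gradient is exactly the weight vector."""
    weights = [1.5, -2.0, 0.5]
    return sum(w * xi for w, xi in zip(weights, x))


def toy_infidelity(model, x, attribution, n_samples=100, sigma=0.1, seed=0):
    """Mean squared gap between predicted and actual output change."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        noise = [rng.gauss(0.0, sigma) for _ in x]
        perturbed = [xi - ni for xi, ni in zip(x, noise)]
        # Change the attribution predicts for this perturbation
        predicted_change = sum(ni * ai for ni, ai in zip(noise, attribution))
        # Change the model actually exhibits
        actual_change = model(x) - model(perturbed)
        total += (predicted_change - actual_change) ** 2
    return total / n_samples


x = [1.0, 2.0, 3.0]
faithful = toy_infidelity(model, x, attribution=[1.5, -2.0, 0.5])
unfaithful = toy_infidelity(model, x, attribution=[0.0, 0.0, 0.0])
print(faithful, unfaithful)
```

For a linear model the gradient is a perfectly faithful attribution, so its score is zero up to rounding. An all-zero attribution predicts no change at all and scores worse.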

Other

Remove the inputs marked as "important": the network's confidence should decrease
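A minimal sketch of such a deletion test, assuming a hypothetical four-feature classifier and an attribution vector that is simply given. Features are removed (set to a fill value) from most to least important and the confidence is recorded after each step.

```python
import math


def confidence(x):
    """Hypothetical classifier confidence: sigmoid of a weighted sum."""
    weights = [2.0, 0.1, -0.3, 1.0]
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def deletion_curve(x, attribution, fill_value=0.0):
    """Remove features from most to least important and record the confidence."""
    order = sorted(range(len(x)), key=lambda i: attribution[i], reverse=True)
    current = list(x)
    curve = [confidence(current)]
    for i in order:
        current[i] = fill_value
        curve.append(confidence(current))
    return curve


x = [1.0, 1.0, 1.0, 1.0]
# Made-up attribution: weight times input for each feature of the toy model
attribution = [2.0, 0.1, -0.3, 1.0]
curve = deletion_curve(x, attribution)
print([round(c, 3) for c in curve])
```

If the explanation is good, the curve drops sharply in the first steps. A flat curve suggests the highlighted inputs were not the ones the model relied on.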

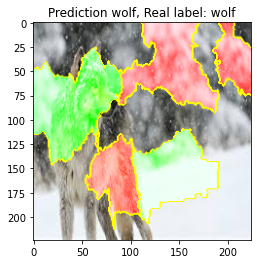

XAI challenges

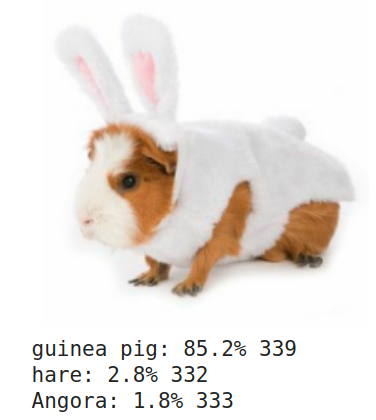

How to explain a wrong prediction

Wrong prediction

Explanation makes even less sense

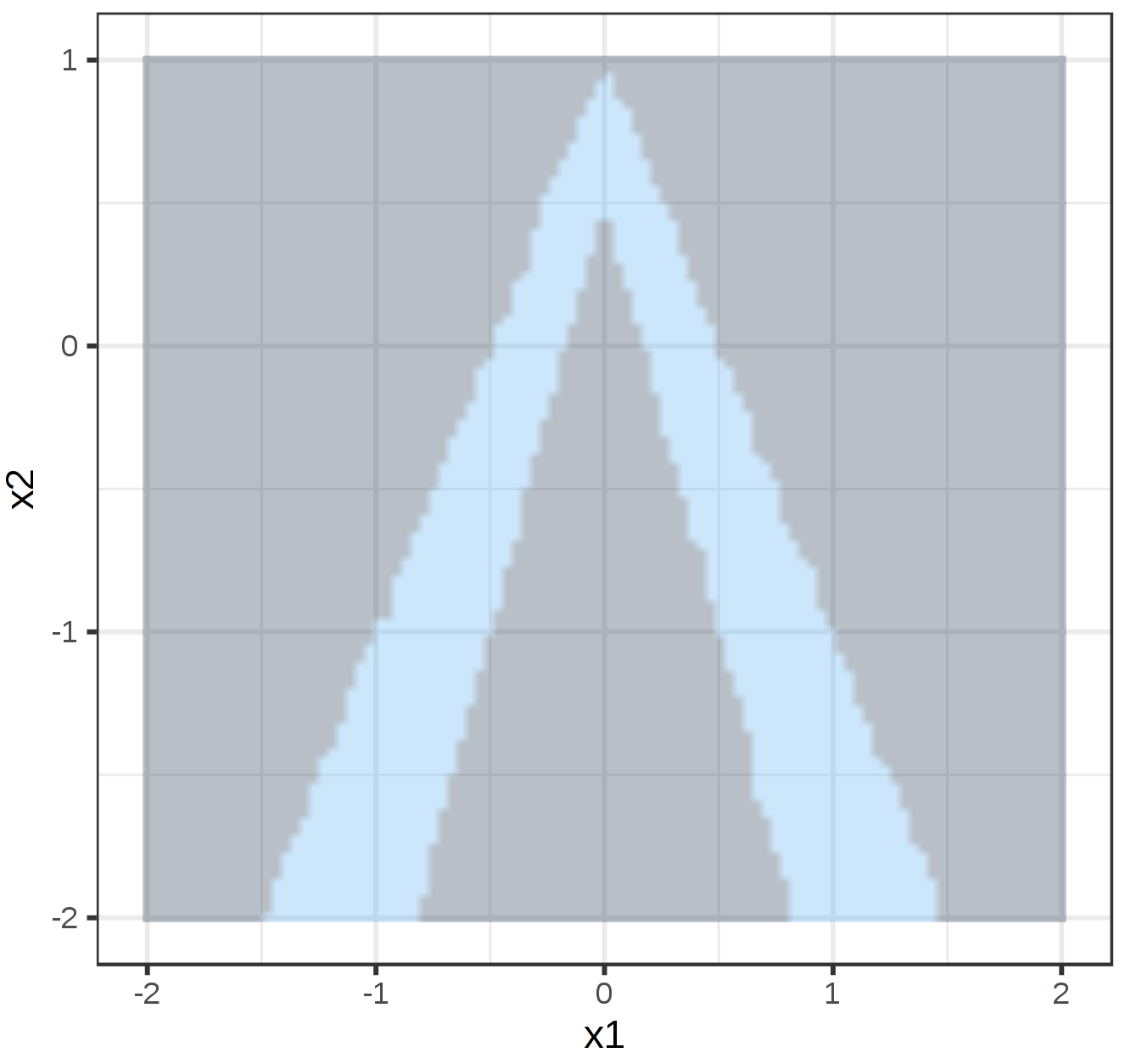

XAI challenges

Hyperparameters have a massive impact on a given explanation
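One concrete case is the baseline in Integrated Gradients. The sketch below assumes a linear model, for which the path integral has a closed form, and compares a black and a white baseline on three made-up "pixels". The function name and the data are hypothetical.

```python
def ig_linear(x, baseline, weights):
    """Integrated Gradients for a linear model F(x) = w . x.

    The gradient is the constant vector w, so the path integral collapses to
    (x_i - x'_i) * w_i for every feature i.
    """
    return [(xi - bi) * wi for xi, bi, wi in zip(x, baseline, weights)]


weights = [1.0, 1.0, 1.0]
x = [0.0, 0.5, 1.0]            # pixel intensities: black, grey, white

black_baseline = [0.0, 0.0, 0.0]
white_baseline = [1.0, 1.0, 1.0]

attr_black = ig_linear(x, black_baseline, weights)
attr_white = ig_linear(x, white_baseline, weights)
print(attr_black)
print(attr_white)
```

With the black baseline the black pixel receives zero attribution, and with the white baseline the white pixel does. The same model and input yield two different stories depending on one hyperparameter.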

OK, I'm hyped

Where should I start my PhD?

TLDR

- XAI methods don't always work well

- But we still need them to trust ML systems

- Out-of-the-box implementations are available and will work with your model

- An open problem, many challenges

References

- "Why Should I Trust You?": Explaining the Predictions of Any Classifier - 2016, M. Ribeiro, S. Singh, C. Guestrin

- Axiomatic Attribution for Deep Networks - 2017, M. Sundararajan, A. Taly, Q. Yan

- Interpretable Machine Learning - Christoph Molnar

- Contrastive Explanations in Neural Networks - 2020, M. Prabhushankar, G. Kwon, D. Temel, G. AlRegib

- Counterfactual Explanations & Adversarial Examples -- Common Grounds, Essential Differences, and Potential Transfers - 2020, T. Freiesleben

- ILSVRC 2012 images visualization by Andrej Karpathy

Thanks

"There's no such thing as a stupid question!"

Kemal Erdem, Piotr Mazurek, Piotr Rarus

Presentation available at: https://tugot17.github.io/XAI-Presentation


Input image

Interpretation of dog prediction